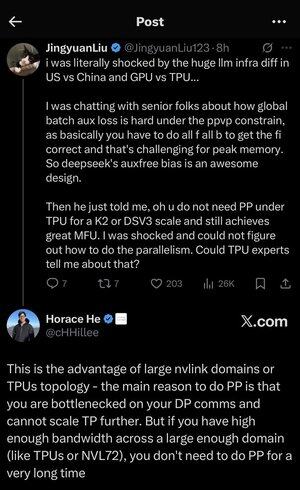

I got a bit lost in all the acronyms and jargon here, so I had Claude explain it without using any acronyms, and now it all makes perfect sense (tl;dr: bandwidth ⟹ simplicity):

This is a fascinating technical discussion about training large language models at scale.

The Core Conversation

Jingyuan Liu is surprised to find that certain complex optimization techniques needed on GPUs (Graphics Processing Units, typically NVIDIA's chips) are unnecessary on TPUs (Tensor Processing Units, Google's specialized AI chips).

Key Technical Concepts Explained:

Hardware Types:

• GPU (Graphics Processing Unit): Originally designed for graphics, now heavily used for AI. NVIDIA dominates this market.

• TPU (Tensor Processing Unit): Google's custom-designed chips specifically for machine learning.

Parallelism Strategies:

When training massive AI models, you need to split the work across many chips. There are several ways to do this:

1) Data Parallelism (DP): Each chip holds a full copy of the model and processes a different batch of data

2) Tensor Parallelism (TP): Individual mathematical operations inside the model, such as large matrix multiplications, are split across chips

3) Pipeline Parallelism (PP): Different layers of the model are placed on different chips, creating a pipeline
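
As a rough illustration of the three strategies above, here is a toy single-process Python sketch. It is not how any real training framework does it: each "chip" is just a slice of a list, there is no actual communication, and the function names (`data_parallel_grad`, `tensor_parallel_matvec`, `pipeline_forward`) and the tiny linear model are made up for this example.

```python
# Toy illustration only: "chips" are simulated with plain Python lists.
# All names and the tiny linear model are assumptions for this sketch.

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def data_parallel_grad(w, xs, ys, n_chips):
    """Data parallelism: every chip has the same weight w but its own data shard.

    Model: y_hat = w * x with mean squared error loss.
    Assumes len(xs) is divisible by n_chips so all shards have equal size.
    """
    grads = []
    for chip in range(n_chips):
        shard_x, shard_y = xs[chip::n_chips], ys[chip::n_chips]
        g = sum(2 * (w * x - y) * x for x, y in zip(shard_x, shard_y)) / len(shard_x)
        grads.append(g)
    # Averaging the per-chip gradients stands in for the "all-reduce" step.
    return sum(grads) / n_chips

def tensor_parallel_matvec(W, x, n_chips):
    """Tensor parallelism: one matrix multiply is split by rows across chips.

    Assumes len(W) is divisible by n_chips.
    """
    rows_per_chip = len(W) // n_chips
    out = []
    for chip in range(n_chips):
        block = W[chip * rows_per_chip:(chip + 1) * rows_per_chip]
        out.extend(matvec(block, x))  # each chip produces part of the output
    return out

def pipeline_forward(layers, x, n_stages):
    """Pipeline parallelism: consecutive layers are grouped into stages.

    Each stage would live on a different chip and pass activations to the next.
    Assumes len(layers) is divisible by n_stages.
    """
    per_stage = len(layers) // n_stages
    stages = [layers[s * per_stage:(s + 1) * per_stage] for s in range(n_stages)]
    for stage in stages:
        for W in stage:
            x = matvec(W, x)
    return x
```

In all three cases the split result matches what a single chip would compute; the strategies differ only in what gets divided (the data, one operation, or the stack of layers) and therefore in how much the chips have to talk to each other.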

The Technical Challenge Being Discussed:

The auxiliary loss problem: When training very large models, you often add "auxiliary losses" (additional training objectives) at intermediate layers to help gradients flow better through the network. Under PPVP (Pipeline Parallelism with Variable Partitioning) constraints, this becomes complex because:

...
