Victor M

🤗 Head of Product @huggingface

Victor M reposted

You can now fine-tune @Alibaba_Qwen Qwen-Image with AI Toolkit on 24 GB of VRAM, using a custom-trained accuracy recovery adapter that lets you fine-tune at 3-bit with minimal precision loss. The marked changes to the defaults should work for 3090/4090 GPUs. More in 🧵

15.77K

Victor M reposted

I really really like @jandotai

It's a very friendly app to locally run LLMs, great for privacy

I've tried others like LM Studio and Ollama and they're nice but very engineer-built, a bit too difficult for me

Jan is simple and cute and pretty and a great alternative to talk to without sending your data (and secrets ;)) to big AI providers

You can even run remote provider models too via API, if you do want that!

Also they're very responsive to feedback and always improving the app

I think there is space for both locally run LLM apps and cloud LLM apps. Running locally makes sense if you wanna talk about very private stuff, therapy etc. It's really important that people can have that without fearing their data might leak in the future

(I'm not affiliated or paid, just really like it!)

218.32K

Victor M reposted

Introducing Jan-v1: a 4B model for web search, an open-source alternative to Perplexity Pro.

In our evals, Jan-v1 delivers 91% SimpleQA accuracy, slightly outperforming Perplexity Pro while running fully locally.

Use cases:

- Web search

- Deep Research

Built on the new version of Qwen's Qwen3-4B-Thinking (up to 256k context length), fine-tuned for reasoning and tool use in Jan.

You can run the model in Jan, llama.cpp, or vLLM. To enable search in Jan, go to Settings → Experimental Features → On, then Settings → MCP Servers → enable a search-related MCP such as Serper.

Use the model:

- Jan-v1-4B:

- Jan-v1-4B-GGUF:

Credit to the @Alibaba_Qwen team for Qwen3 4B Thinking & @ggerganov for llama.cpp.

633.24K

Qwen-Image + Wan-2.2 = 🔥

Victor M, 12 Aug, 06:19

I'm so hyped that we can now generate videos of this quality in under 30 seconds. Open source for the win!

👇Try Wan 2.2 (with Lightning LoRA) on Hugging Face

1.18K

Victor M reposted

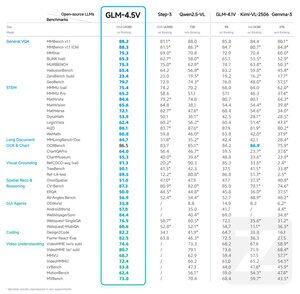

Introducing GLM-4.5V: a breakthrough in open-source visual reasoning

GLM-4.5V delivers state-of-the-art performance among open-source models in its size class, dominating across 41 benchmarks.

Built on the GLM-4.5-Air base model, GLM-4.5V inherits proven techniques from GLM-4.1V-Thinking while achieving effective scaling through a powerful 106B-parameter MoE architecture.

Hugging Face:

GitHub:

API:

Try it now:

264.76K