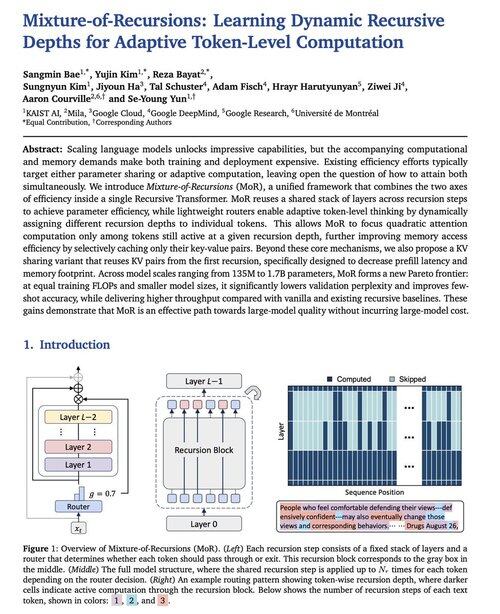

Google DeepMind just dropped a new LLM architecture called Mixture-of-Recursions.

It reportedly gets 2x faster inference, fewer training FLOPs, and ~50% less KV cache memory. Really interesting read.

It has the potential to be a Transformer killer.

Source:
