Adam Wolff

Claude Code @AnthropicAI 🤖

Avid cook, dedicated snow person, yoga enthusiast

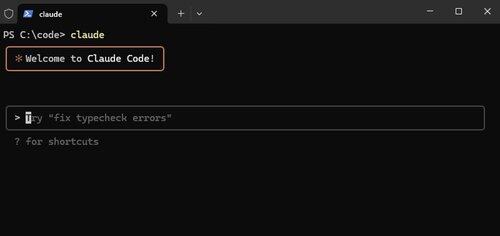

Claude Code, now native on Windows.

Features like this aren't flashy, but they make Claude Code *so* powerful. We want it to run everywhere you do.

Alex Albert · 15 Jul, 03:07

We have another big update for Claude Code today: it’s now natively available for Windows.

13.04K

"While competition feels like a powerful force, collaboration is the only force more powerful."

@tomocchino ❤️

Ryan Vogel · 13 Jul, 01:50

check out part one of our newest episode with @tomocchino from @vercel

(thanks again to vercel for letting us film at HQ)

10.21K

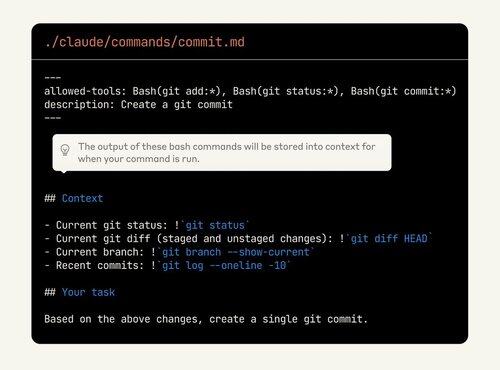

Claude Code is so customizable, but the features are hard to discover. A lot of people are sleeping on the power of custom commands. Now they can even embed bash output!

Take another look if you're not already using these features.

Alex Albert · 2 Jul, 00:19

As a reminder, slash commands let you store custom prompts as Markdown files and invoke them with /your-command.

With this update, you can now:

- Execute bash commands from slash commands

- @ mention files for context

- Enable extended thinking with keywords within commands

10K

Evals today are like tests were a decade ago. Obviously important, but also unclear exactly how and how much to invest.

This is great advice, but the most important thing is to try. If your product incorporates AI and you don't have evals, you are building a castle made of sand.

shyamal · 20 May 2025

getting started with evals doesn't require too much. the pattern that we've seen work for small teams looks a lot like test‑driven development applied to AI engineering:

1/ anchor evals in user stories, not in abstract benchmarks: sit down with your product/design counterpart and list out the concrete things your model needs to do for users. "answer insurance claim questions accurately", "generate SQL queries from natural language". for each, write 10–20 representative inputs and the desired outputs/behaviors. this is your first eval file.

2/ automate from day one, even if it's brittle. resist the temptation to "just eyeball it"; vibes don't scale for long. wrap your evals in code. you can write a simple pytest that loops over your examples, calls the model, and asserts that certain substrings appear. it's crude, but it's a start.

3/ use the model to bootstrap harder eval data. manually writing hundreds of edge cases is expensive. you can use reasoning models (o3) to generate synthetic variations ("give me 50 claim questions involving fire damage") and then hand‑filter. this speeds up coverage without sacrificing relevance.

4/ don't chase leaderboards; iterate on what fails. when something fails in production, don't just fix the prompt – add the failing case to your eval set. over time your suite will grow to reflect your real failure modes. periodically slice your evals (by input length, by locale, etc.) to see if you're regressing on particular segments.

5/ evolve your metrics as your product matures. as you scale, you'll want more nuanced scoring (semantic similarity, human ratings, cost/latency tracking). build hooks in your eval harness to log these and trend them over time. instrument your UI to collect implicit feedback (did the user click "thumbs up"?) and feed that back into your offline evals.

6/ make evals visible. put a simple dashboard in front of the team and stakeholders showing eval pass rates, cost, latency. use it in stand‑ups. this creates accountability and helps non‑ML folks participate in the trade‑off discussions.

finally, treat evals as a core engineering artifact. assign ownership, review them in code review, celebrate when you add a new tricky case. the discipline will pay compounding dividends as you scale.
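A minimal sketch of what steps 1, 2, and 4 above could look like in practice. Everything named here is an assumption for illustration: the cases in `EVAL_CASES`, the stubbed `call_model` function (a stand-in for whatever model client you actually use), and the segment labels are hypothetical. It only shows the crude "loop, call, assert substrings" pattern plus a per-segment pass rate, not anyone's production harness.

```python
from collections import defaultdict

# Hypothetical eval cases: (segment, user input, substrings the answer must contain).
EVAL_CASES = [
    ("claims", "Is fire damage covered by my home policy?", ["fire", "covered"]),
    ("claims", "How do I file a claim for water damage?", ["file", "claim"]),
    ("sql", "Show all orders placed in 2024", ["SELECT", "orders"]),
]


def call_model(prompt: str) -> str:
    """Placeholder for the real model call; swap in your own client code."""
    canned = {
        "Is fire damage covered by my home policy?":
            "Yes, fire damage is covered under the standard policy.",
        "How do I file a claim for water damage?":
            "You can file a claim online or by phone.",
        "Show all orders placed in 2024":
            "SELECT * FROM orders WHERE year = 2024;",
    }
    return canned.get(prompt, "")


def run_evals(cases=EVAL_CASES, model=call_model):
    """Call the model on every case; return (segment, prompt, passed) tuples."""
    results = []
    for segment, prompt, required in cases:
        answer = model(prompt).lower()
        # Crude check: every required substring appears, ignoring case.
        passed = all(s.lower() in answer for s in required)
        results.append((segment, prompt, passed))
    return results


def pass_rate_by_segment(results):
    """Slice results by segment to spot regressions in one area."""
    totals, passes = defaultdict(int), defaultdict(int)
    for segment, _, passed in results:
        totals[segment] += 1
        passes[segment] += passed
    return {seg: passes[seg] / totals[seg] for seg in totals}


def test_eval_cases():
    """pytest collects functions named test_*; this fails if any case fails."""
    failures = [prompt for _, prompt, passed in run_evals() if not passed]
    assert not failures, f"failing eval cases: {failures}"
```

When something breaks in production, adding the failing input to `EVAL_CASES` is enough for the next `pytest` run to cover it, and `pass_rate_by_segment(run_evals())` gives a number you could put on a dashboard.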

1.16K
