Trending topics

Bonk Eco continues to show strength amid $USELESS rally

Pump.fun to raise $1B in token sale; traders speculate on an airdrop

Boop.Fun leading the way with a new launchpad on Solana.

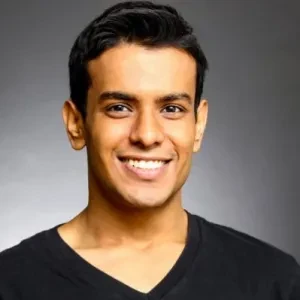

Deedy

Partner @MenloVentures. Formerly founding team @glean, @Google Search. @Cornell CS. Tweets about tech, immigration, India, fitness, and search.

Top

Ranking

Favorites