Last week @NVIDIA introduced CUDA 13.1, and with it a new parallel programming paradigm: tiles. "Traditional" CUDA exposes a single-instruction, multiple-thread (SIMT) hardware and programming model to developers.

1/6

The SIMT model allows maximum flexibility, but it can become tedious to write and hard to optimize. The tile paradigm takes tensors as the fundamental objects and builds up from there. It serves as an intermediate layer for higher-level languages.

2/6

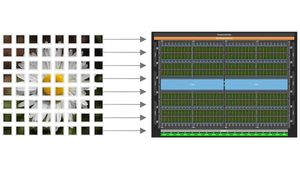

Tile-based programming enables you to program your algorithm by specifying chunks of data, or tiles, and then defining the computations performed on those tiles.

3/6

You don’t need to specify how your algorithm is executed at an element-by-element level: the compiler and runtime will handle that for you.

4/6
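
To make the contrast concrete, here is a rough sketch in plain Python. It is not cuTile Python and uses none of its API; the names `TILE`, `add_elementwise`, and `add_tiled` are made up for illustration, and the tile size is an arbitrary assumption. The first function mimics the per-element view, the second the per-tile view.

```python
# Illustrative only: plain Python, NOT the cuTile Python API.
# TILE, add_elementwise and add_tiled are hypothetical names.

TILE = 4  # assumed tile size, chosen arbitrarily for this example


def add_elementwise(a, b):
    """Per-element view: each index i plays the role of one thread."""
    out = [0.0] * len(a)
    for i in range(len(a)):
        out[i] = a[i] + b[i]
    return out


def add_tiled(a, b, tile=TILE):
    """Per-tile view: load a chunk, operate on the whole chunk, store it."""
    out = [0.0] * len(a)
    for start in range(0, len(a), tile):
        end = min(start + tile, len(a))  # the last tile may be partial
        tile_a = a[start:end]            # "load" one tile of each input
        tile_b = b[start:end]
        tile_out = [x + y for x, y in zip(tile_a, tile_b)]  # whole-tile op
        out[start:end] = tile_out        # "store" the result tile
    return out


a = [float(i) for i in range(10)]
b = [2.0 * i for i in range(10)]
assert add_elementwise(a, b) == add_tiled(a, b)
```

Both functions compute the same result. The difference is where the author's attention goes: in the tiled version the inner, per-element work is hidden inside a single whole-tile operation, which is the part a tile compiler and runtime would be free to map onto hardware.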

Interestingly enough, tile-based programming will be available to Python developers first, through cuTile Python. Support for C++, traditionally the primary programming language for CUDA, will be released later.

5/6
